Thanks to deep learning algorithms, FakeApp recognizes the faces that appear in a video and lets you replace them with other people’s faces. Here’s how it works.

In recent days there has been a lot of talk about FakeApp, an application written in Java that started as a pastime but shows how easily even videos may soon be manipulated.

FakeApp marks a real milestone because it demonstrates that, with deep learning, the face of a person in a video can be replaced with someone else’s face.

Videos are made of frames: individual images that, played in sequence, form the filmed footage. What would happen if software, or rather an artificial intelligence, could recognize the same person’s face in every frame and replace it with images of another subject? A person could be shown in poses they never struck, performing actions that never actually happened.
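To make the frame-by-frame idea concrete, here is a minimal Python sketch. It assumes the video has already been decoded into a NumPy array of frames and that a face detector has supplied one bounding box per frame. The function `swap_faces` and the `render_face` callback are hypothetical: the callback stands in for whatever model produces the replacement face, and the plain rectangular paste skips the blending and color matching a real tool would need.

```python
import numpy as np

def swap_faces(frames, boxes, render_face):
    """Replace one face region per frame (hypothetical helper).

    frames      -- uint8 array, shape (n_frames, height, width, 3)
    boxes       -- one (top, left, h, w) tuple per frame, e.g. from a face detector
    render_face -- callable(frame_index, h, w) -> uint8 array of shape (h, w, 3);
                   stands in for the model that synthesizes the new face
    """
    if len(boxes) != len(frames):
        raise ValueError("need exactly one box per frame")
    out = frames.copy()  # leave the original video untouched
    for i, (top, left, h, w) in enumerate(boxes):
        out[i, top:top + h, left:left + w] = render_face(i, h, w)
    return out

def white_face(i, h, w):
    """Toy 'face': a plain white patch of the requested size."""
    return np.full((h, w, 3), 255, dtype=np.uint8)

# Toy example: a 3-frame black "video" in which the face box drifts to the right.
video = np.zeros((3, 32, 32, 3), dtype=np.uint8)
boxes = [(8, 4 + 2 * i, 10, 10) for i in range(3)]
fake = swap_faces(video, boxes, white_face)
print(fake[2, 8, 8].tolist(), video[2, 8, 8].tolist())
```

Because the box is given per frame, the replacement follows the face as it moves, which is what makes the result look like continuous footage rather than a static overlay.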

The beauty (or the ugliness, depending on your point of view…) of FakeApp is that it recognizes and replaces faces on mid-range hardware, such as the components found in a typical gaming PC.

Furthermore, the face images are dynamic, and the result is a surrogate for the original video: a fake version that, especially in the most successful creations, really seems to show another person. The video below appears to show the British actress Daisy Ridley. In reality, the body is not hers: her face, with its various expressions, was superimposed on another person’s body using FakeApp.

To use FakeApp, currently hosted on MEGA servers, you need a PC with an NVIDIA graphics card, CUDA 8.0, and the well-known FFmpeg software.
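FFmpeg is presumably what splits the source video into frames and rebuilds the final clip. The exact commands FakeApp runs are not documented here, so the helpers below are hypothetical, as are the file names and the 25 fps rate. They only build standard FFmpeg argument lists (`-i`, `-vf fps=…`, `-framerate`, `-c:v libx264`, `-pix_fmt yuv420p`) and print them; pass `dry_run=False` to actually execute them, which requires `ffmpeg` on the PATH and an existing output directory.

```python
import subprocess
from pathlib import Path

def extract_frames_cmd(video, out_dir, fps=25):
    """Arguments for splitting a video into numbered PNG frames (out_dir must exist)."""
    pattern = str(Path(out_dir) / "frame_%05d.png")
    return ["ffmpeg", "-i", str(video), "-vf", f"fps={fps}", pattern]

def assemble_video_cmd(frames_dir, output, fps=25):
    """Arguments for encoding numbered PNG frames back into an H.264 MP4 (no audio)."""
    pattern = str(Path(frames_dir) / "frame_%05d.png")
    return ["ffmpeg", "-framerate", str(fps), "-i", pattern,
            "-c:v", "libx264", "-pix_fmt", "yuv420p", str(output)]

def run(cmd, dry_run=True):
    """Print the command; execute it only when dry_run is False."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)

run(extract_frames_cmd("input.mp4", "frames"))
# ... the faces in frames/ would be replaced and saved to frames_swapped/ here ...
run(assemble_video_cmd("frames_swapped", "output.mp4"))
```

Sampling and re-encoding at the same frame rate keeps the timing of the rebuilt clip consistent; the original audio track is not carried over in this sketch.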

After FakeApp has been “trained” on the faces of the two subjects (the one in the original video and the one that will replace it), the application “assembles” and produces the final video.

Of course, getting quality results takes a lot of effort and patience, but FakeApp’s author has concretely demonstrated what his “creature” can do.

The key lies in choosing images that are “compatible” with the video you want to modify.

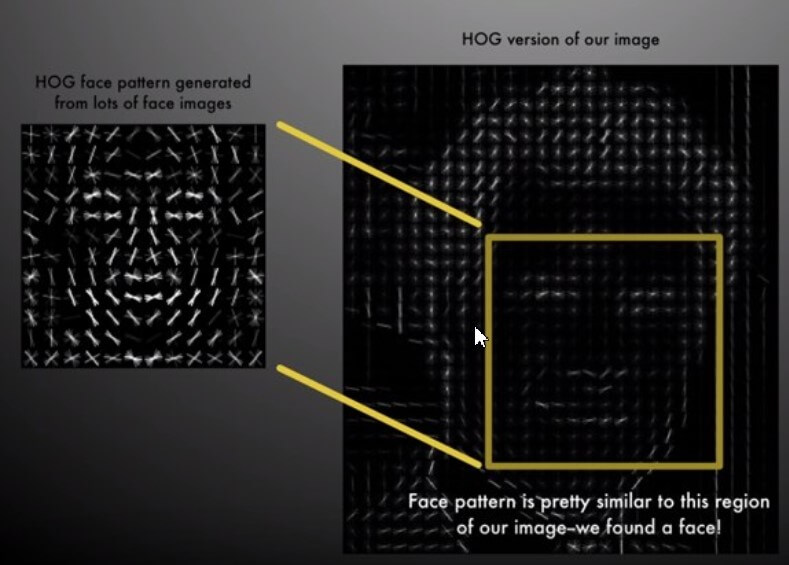

Histogram of oriented gradients (HOG): a technique used in computer vision and image processing for automatic object recognition. It counts the occurrences of each gradient orientation within localized portions of an image. In other words, it describes local shapes such as edges and contours by looking at the direction in which pixel intensity changes most sharply.
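As a rough illustration of the technique (not FakeApp’s own code), the NumPy sketch below computes the per-cell histograms at the heart of HOG: image gradients, unsigned orientations between 0 and 180 degrees, and magnitude-weighted counts in 9 bins for each 8×8-pixel cell. It deliberately omits the bin interpolation and block normalization of the full method; libraries such as scikit-image ship a complete `hog` function.

```python
import numpy as np

def hog_cells(image, cell=8, bins=9):
    """Simplified histogram of oriented gradients.

    image -- 2-D grayscale array
    Returns shape (rows // cell, cols // cell, bins): for each cell, a histogram
    of gradient orientations (0-180 degrees) weighted by gradient magnitude.
    """
    img = image.astype(float)
    gy, gx = np.gradient(img)                      # derivatives along rows, then columns
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180   # unsigned orientation
    # Clamp so that a float rounding to exactly 180 still lands in the last bin.
    bin_idx = np.minimum((angle / (180 / bins)).astype(int), bins - 1)

    n_r, n_c = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_r, n_c, bins))
    for r in range(n_r):
        for c in range(n_c):
            rows = slice(r * cell, (r + 1) * cell)
            cols = slice(c * cell, (c + 1) * cell)
            hist[r, c] = np.bincount(bin_idx[rows, cols].ravel(),
                                     weights=magnitude[rows, cols].ravel(),
                                     minlength=bins)
    return hist

# A vertical edge: dark left half, bright right half.
edge = np.zeros((16, 16))
edge[:, 8:] = 255
h = hog_cells(edge)
print(h.shape, h.argmax(axis=-1))  # all gradient energy falls in bin 0 (horizontal gradient)
```

Flattening the cell histograms gives a feature vector that describes the image’s shape content independently of its exact pixel values, which is what makes such descriptors useful for recognizing faces.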

By comparing the descriptors extracted this way against an archive of images, it is possible to find images with similar, comparable characteristics.
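One simple way to compare descriptors with an archive, assumed here for illustration rather than taken from FakeApp, is cosine similarity: each image is reduced to a feature vector (for example a flattened HOG histogram), and the archive entries pointing in the most similar direction are returned. The function name `most_similar` and the toy vectors are hypothetical.

```python
import numpy as np

def most_similar(query, archive, k=3):
    """Return (indices, scores) of the k archive rows closest to query by cosine similarity.

    query   -- 1-D feature vector
    archive -- 2-D array, one descriptor per row
    """
    q = query / (np.linalg.norm(query) + 1e-12)                       # unit-length query
    a = archive / (np.linalg.norm(archive, axis=1, keepdims=True) + 1e-12)
    scores = a @ q                                                    # cosine of each row with q
    order = np.argsort(-scores)[:k]                                   # highest similarity first
    return order, scores[order]

archive = np.array([[1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0],
                    [0.9, 0.1, 0.0],
                    [0.0, 0.0, 1.0]])
query = np.array([1.0, 0.0, 0.0])
idx, scores = most_similar(query, archive, k=2)
print(idx, scores)
```

Cosine similarity ignores overall brightness or contrast scaling of a descriptor, so two images with the same shapes but different exposure still score as similar.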

Through operations such as convolution and pooling, cornerstones of deep learning applications, the images are classified, and the ones most likely to give the best result can be selected.
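The two operations can be shown on a toy scale. The sketch below is a generic illustration, not FakeApp’s implementation: a “valid” 2-D convolution (strictly a cross-correlation, as in most deep learning frameworks, since the kernel is not flipped), a ReLU activation, and 2×2 max pooling. The edge-detecting kernel is hand-picked here; in a trained network the kernels are learned from data.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers (no kernel flip, no padding)."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

image = np.zeros((6, 6))
image[:, 3:] = 1.0                                # vertical edge between columns 2 and 3
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)  # responds to left-to-right brightening
features = relu(conv2d(image, kernel))
pooled = max_pool(features)
print(features)
print(pooled)
```

Convolution turns the image into a map of where a pattern occurs; pooling then keeps the strongest response in each neighborhood, shrinking the map while preserving the fact that the edge is present.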

The process is handled by autoencoders, networks that learn a compact encoding of the images from which the faces used to compose the final video can be reconstructed.
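Face-swap tools of this kind are commonly described as using one encoder shared by both people and a separate decoder for each: every decoder learns to rebuild its own person’s faces from the shared code, and the swap comes from feeding person A’s frames through person B’s decoder. The sketch below assumes that arrangement, which the paragraph above does not spell out, and shrinks it to linear layers trained by plain gradient descent on random toy vectors. Real tools use convolutional networks on aligned face crops; every name and size here is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, CODE = 16, 4  # toy "face" vector size and code size (hypothetical values)

# Toy data standing in for aligned face crops: each person's "faces"
# vary only inside their own random 4-D subspace.
basis_a = rng.normal(size=(CODE, DIM))
basis_b = rng.normal(size=(CODE, DIM))
faces_a = rng.normal(size=(200, CODE)) @ basis_a / np.sqrt(CODE)
faces_b = rng.normal(size=(200, CODE)) @ basis_b / np.sqrt(CODE)

# One encoder shared by both people, one decoder per person (linear layers).
params = {
    "enc": rng.normal(scale=0.1, size=(DIM, CODE)),
    "dec_a": rng.normal(scale=0.1, size=(CODE, DIM)),
    "dec_b": rng.normal(scale=0.1, size=(CODE, DIM)),
}

def train_step(x, dec_key, lr=0.1):
    """One gradient-descent step on the mean squared reconstruction error of x
    through the shared encoder and the chosen decoder; returns the pre-step loss."""
    enc, dec = params["enc"], params[dec_key]
    code = x @ enc
    err = code @ dec - x
    grad_out = 2 * err / err.size                   # d(loss)/d(reconstruction)
    params[dec_key] = dec - lr * (code.T @ grad_out)
    params["enc"] = enc - lr * (x.T @ (grad_out @ dec.T))
    return np.mean(err ** 2)

first = (train_step(faces_a, "dec_a"), train_step(faces_b, "dec_b"))
for _ in range(2000):                               # alternate between the two people
    loss_a = train_step(faces_a, "dec_a")
    loss_b = train_step(faces_b, "dec_b")
print(f"loss A {first[0]:.3f} -> {loss_a:.5f}, loss B {first[1]:.3f} -> {loss_b:.5f}")

# The swap: encode person A's faces, decode them with person B's decoder.
swapped = faces_a @ params["enc"] @ params["dec_b"]
```

Sharing the encoder is the design choice that makes the swap possible: both decoders read the same kind of code, so a code computed from A’s face is something B’s decoder knows how to turn into one of B’s faces.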

In the future, having a nose for fake news will not be enough, and neither will being able to spot photomontages (we discussed them in the same article on fake news): we will also have to be increasingly critical and skeptical about the reliability of videos published online.

If FakeApp can already produce videos of truly amazing quality, imagine what could be achieved with more powerful hardware and, above all, by harnessing shared cloud resources from providers such as Google, Amazon, and Microsoft.

I’m Mr.Love. I’m the admin of Techsmartest.com.